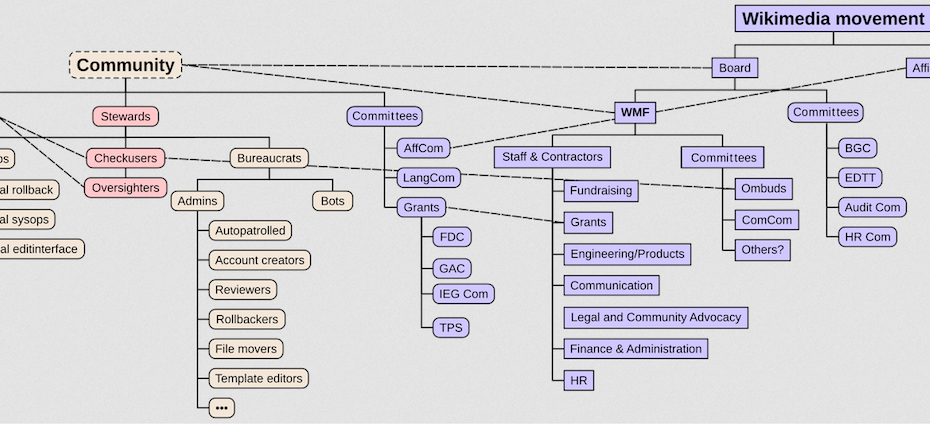

Just before the summer recess, the European Parliament’s Internal Market and Consumer Protection committee released over 1300 pages of amendments to the EU’s foremost content moderation law. We took the summer to delve into the suggestions and are ready to kick off the new Parliamentary season by sharing some thoughts on them. Our main focus remains on how responsible communities can continue to be in control of online projects like Wikipedia, Wikimedia Commons and Wikidata.

1. The Greens/EFA on “manifestly illegal content”

AM 691 by Alexandra Geese on behalf of the Greens/EFA Group

Article 2 – paragraph 1 – point g a (new)

‘manifestly illegal content’ means any information which has been subject of a specific ruling by a court or administrative authority of a Member State or where it is evident to a layperson, without any substantive analysis, that the content is not in compliance with Union law or the law of a Member State;

Almost any content moderation system will require editors or service providers to assess content and make ad-hoc decisions on whether something is illegal and therefore needs to be removed. Of course, things aren’t always black-and-white, and sometimes it takes a while to make the right decision, as with the leaked images of Putin’s Palace. Other times it is immediately clear that something is an infringement, such as a verbatim copy of a hit song. To recognise these differences, the DSA rightfully uses the term “manifestly illegal”, but it fails to actually define it. We agree with Alexandra Geese and the Greens/EFA Group that the wording of Recital 47 should make it into the definitions.
